Who Oversees AI Business Operations?

AI governance in business operations has undergone a dramatic shift. Organisations face complex challenges as AI becomes crucial for strategic decisions [1]. Only 1% of business leaders view their companies as truly mature in AI deployment [1].

Corporate AI investments are booming. 92% of companies plan to increase AI spending over the next three years [1]. This rapid growth raises questions about AI business process management and regulation.

Estimates suggest a £3.5 trillion opportunity in productivity growth through corporate AI use [1]. Workplace dynamics are changing as employees become more familiar with generative AI tools.

94% of employees and 99% of C-suite leaders report some familiarity with these technologies [1]. However, significant gaps exist between leadership perceptions and actual AI implementation.

AI oversight requires a nuanced approach. 34% of employees expect to use generative AI for over 30% of their work tasks within a year [1]. Leadership perspectives on this vary considerably.

The challenge lies in bridging these perception gaps. Developing robust frameworks for responsible AI business operations is crucial. Employee attitudes toward AI remain complex.

41% of workers express apprehension about AI [1]. This indicates a clear need for supportive integration strategies. Effective governance is key to managing technological transformation and workforce expectations.

The Evolution of AI Governance in Business

AI governance has transformed from theory to business practice. Since 1956, AI’s journey has been revolutionary. The Dartmouth Summer Research Project on Artificial Intelligence marked its beginning [2].

Business leaders now see AI integration as crucial. 77% believe generative AI is market-ready and vital for competitiveness [2]. This shows a major shift in how firms approach tech innovation.

Historical Development of AI Oversight

AI governance has seen key milestones over the years. These include:

- 1956: The Dartmouth Conference – Birth of AI as a research field

- 2010-2020: Machine learning moves into mainstream business use

- 2023: Mainstream adoption of generative AI tools

Shift from Theory to Business Implementation

Companies are taking AI seriously. 60% of CEOs are exploring new AI policies to reduce risks [2]. 59% have appointed staff to oversee AI integration [2].

This shows a strategic approach to AI governance. Firms are adapting to the new tech landscape.

Key Milestones in AI Business Integration

AI’s role in business has grown rapidly. 47% of organisations have set up generative AI ethics councils [2]. AI ethics spending rose from 2.9% in 2022 to 4.6% in 2024 [2].

These trends show the importance of responsible AI use. However, challenges remain in this field.

Only 21% of executives say their AI governance is systemic or innovative [2]. This shows the need for stronger AI governance frameworks.

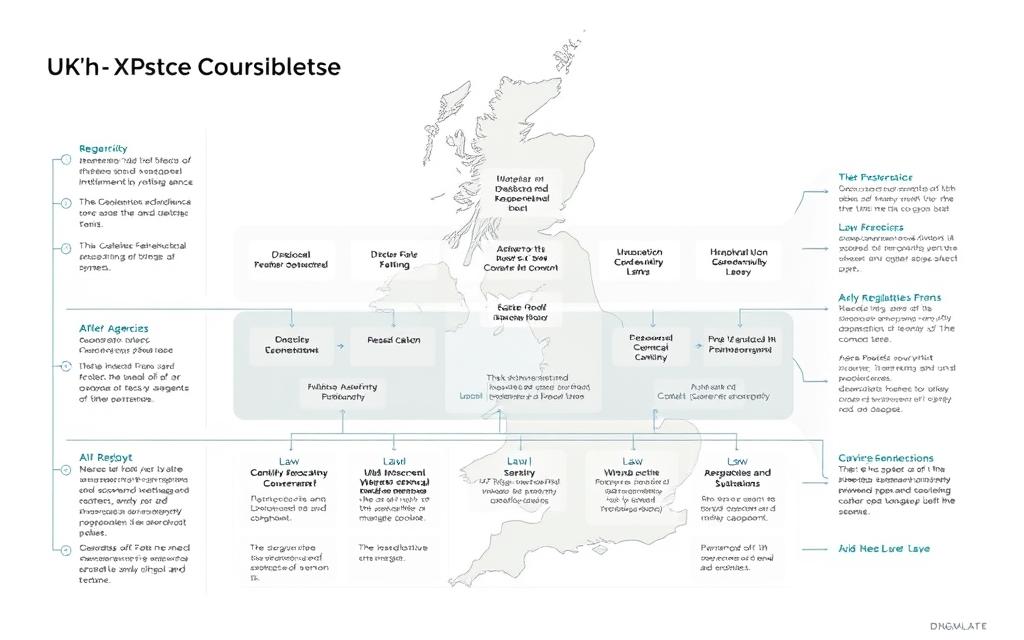

Understanding the UK’s Regulatory Framework for AI

The UK has crafted a unique approach to AI regulation. It balances innovation with strong governance. The government’s white paper, “A pro-innovation approach to AI regulation”, outlines this forward-thinking framework [3].

The UK’s AI framework is based on five key principles. In short, these are safety, transparency, fairness, accountability, and contestability [4]. This approach aims to create an environment where AI can thrive ethically.

The National AI Strategy focuses on fostering AI growth. It also maintains high ethical standards [3]. The UK aims to drive prosperity through innovative AI technologies.

This framework seeks to build public trust in AI [3]. It addresses potential risks like algorithmic bias. Past incidents, such as Amazon’s biased AI recruiting tool, highlight the need for thorough governance [4].

The UK’s approach represents a delicate balance between fostering technological innovation and ensuring responsible AI development.

The government’s consultation on the white paper ended in June 2023. This shows a commitment to collaborative AI policy [3]. By 2030, the UK aims to become a science and tech superpower.

The five principles, in full, are:

- Safety, security, and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress [4]

Who Is in Charge of AI Business Matters?

AI business oversight involves government agencies, corporate leaders, and specialised committees. The UK is creating a strong framework for responsible AI use across sectors. Strategic governance mechanisms are key to this process.

AI governance requires teamwork among various stakeholders. This collaboration helps manage new tech challenges effectively.

Government Regulatory Bodies

Regulatory bodies are vital for AI business oversight. Key organisations monitoring AI include:

- Information Commissioner’s Office (ICO)

- Financial Conduct Authority (FCA)

- Office of Communications (Ofcom)

These agencies are expanding their roles to tackle AI-related issues. The government has set up a Central Function within the Department for Science, Innovation and Technology (DSIT) to coordinate AI governance.

This new body oversees AI management across different sectors [5]. It ensures a unified approach to AI regulation and development.

Corporate Leadership Responsibilities

Corporate AI leadership is crucial for ethical and compliant strategies. Organisations must create strong frameworks to address risks and ensure responsible innovation.

Effective AI governance balances tech progress with ethical concerns.

Industry-Specific Oversight Committees

Specialised committees provide targeted oversight in various industries. These groups create sector-specific guidelines for unique AI challenges [6].

Government regulators, corporate leaders, and industry committees work together. This teamwork ensures responsible management of AI business operations.

Global AI Governance Standards and Compliance

Global AI governance is evolving rapidly. International efforts focus on establishing standards for responsible AI development. The UK aims to play a leading role in shaping global AI standards [7].

International compliance in AI emerges through collaborative initiatives. The US National Standards Strategy for Critical and Emerging Technology stresses creating safe, universal, and interoperable technology across jurisdictions [7]. Priorities across these initiatives include:

- Developing human-centered AI standards

- Ensuring technical soundness and market responsiveness

- Addressing potential risks in AI implementation

International bodies are advancing AI governance. The International Association of Insurance Supervisors represents over 200 jurisdictions. It plays a crucial role in establishing AI compliance frameworks for insurance supervision [8].

| AI Governance Focus Areas | Key Considerations |
|---|---|
| Data Protection | Ensuring privacy and confidentiality |
| Ethical Standards | Preventing algorithmic bias |
| Risk Management | Addressing potential technological vulnerabilities |

Global AI governance acknowledges unique regional challenges. Low- and middle-income countries aim to leverage AI innovations. They also focus on mitigating risks like employment disruptions and cybersecurity threats [7].

The future of AI governance relies on collaborative, transparent, and consensus-driven standards that prioritise human rights and societal well-being.

The Role of Existing Regulators in AI Supervision

The UK’s regulatory landscape is changing to address AI supervision challenges across sectors. Existing regulatory bodies are adapting their strategies for comprehensive AI oversight. This shift aims to manage the revolutionary impact of AI on business operations.

The government has allocated £10 million to enhance regulatory capabilities for AI governance. This funding supports regulators in developing robust frameworks for sector-specific oversight. It’s a significant step towards addressing the complex challenges of AI regulation.

Financial Conduct Authority (FCA)

The FCA is taking a proactive stance on AI challenges in financial services. In October 2022, it published a Discussion Paper seeking feedback on AI adoption. This initiative aims to shape the future of AI regulation in finance. The FCA’s focus areas include:

- Ensuring fair and ethical AI implementation

- Mitigating potential biases in financial decision-making

- Protecting consumer interests through transparent AI processes

Information Commissioner’s Office (ICO)

The ICO is crucial in protecting data privacy and addressing ethical concerns around AI technologies. Its oversight focuses on the following areas:

- Data protection and privacy standards

- Preventing algorithmic discrimination

- Ensuring transparency in AI data processing

Office of Communications (Ofcom)

Ofcom is broadening its scope to tackle AI challenges in communication technologies. The regulator is developing comprehensive guidelines to manage AI’s impact on digital communications [9].

| Regulatory Body | Primary AI Supervision Focus | Key Challenges |
|---|---|---|
| FCA | Financial Services | Ethical AI Implementation |
| ICO | Data Protection | Privacy and Algorithmic Bias |
| Ofcom | Digital Communications | Technology Governance |

These regulatory bodies were asked to publish their AI regulation strategies by 30 April 2024. The deadline signalled a strong commitment to responsible AI supervision across the UK [10].

Implementation of AI Safety and Security Measures

The UK Government is preparing targeted regulatory interventions for advanced AI development. These focus on critical aspects of AI safety and security measures. They address transparency, data quality, and risk management across various technological platforms [11].

The US National Institute of Standards and Technology (NIST) has released an AI Risk Management Framework. This guide helps companies establish robust AI governance [11]. AI security strategies now require comprehensive approaches to mitigate potential risks.

Companies should set internal standards and rigorously test AI models for safety and fairness [11]. Adversarial testing can enhance AI systems’ robustness against potential failures [11]. Organisations must also vet AI providers, examining their training methods and responsible AI practices [11].
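
As a rough illustration of what an internal robustness test might look like, the Python sketch below checks how often a model's decision flips when its inputs are nudged by small random noise. It is a simple random-perturbation check, not a true adversarial search, and everything in it is an assumption made for illustration: `toy_credit_model`, its weights, the noise level, and the sample cases are all hypothetical.

```python
import random
from typing import Callable, Sequence

# Hypothetical stand-in for a deployed model: approves (1) when a weighted
# score clears a threshold. Weights and threshold are illustrative only.
def toy_credit_model(features: Sequence[float]) -> int:
    income, debt_ratio = features
    score = 0.6 * income - 0.4 * debt_ratio
    return 1 if score >= 0.2 else 0


def flip_rate(
    model: Callable[[Sequence[float]], int],
    inputs: Sequence[Sequence[float]],
    noise: float = 0.01,
    trials: int = 20,
    seed: int = 0,
) -> float:
    """Return the share of inputs whose decision changes under small noise."""
    if not inputs:
        raise ValueError("inputs must not be empty")
    rng = random.Random(seed)  # fixed seed keeps the check repeatable
    flipped = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            perturbed = [v + rng.uniform(-noise, noise) for v in x]
            if model(perturbed) != baseline:
                flipped += 1
                break  # one flip is enough to mark this input as unstable
    return flipped / len(inputs)


# Cases far from the decision boundary versus one sitting right on it.
stable_cases = [(0.9, 0.1), (0.1, 0.9)]
borderline_cases = [(0.5, 0.25)]

stable_rate = flip_rate(toy_credit_model, stable_cases)
borderline_rate = flip_rate(toy_credit_model, borderline_cases, trials=200)
print(f"stable flip rate: {stable_rate:.2f}")
print(f"borderline flip rate: {borderline_rate:.2f}")
```

A high flip rate on realistic inputs would suggest the model's decisions are fragile near its threshold, which is the kind of finding an internal standard could require teams to investigate before deployment.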

Cybersecurity is crucial in AI implementation. AI might increase cyberattacks, especially on critical infrastructure like power grids [12]. Strong cybersecurity measures are vital to protect AI systems from vulnerabilities [13].

Regular algorithmic audits ensure fairness and reduce ethical risks in workplace AI technologies [13]. Ongoing training programmes help employees interact safely with AI tools [13]. Clear data policies address privacy risks and maintain technological integrity [13].
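
One possible building block for an algorithmic audit is a comparison of outcome rates across groups. The Python sketch below computes per-group selection rates and the ratio between the lowest and highest rate. The group labels, the sample log, and the 0.8 review threshold are assumptions for illustration; the threshold borrows the "four-fifths" heuristic from US employment practice and is not a UK regulatory requirement.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Assumed review threshold (the US "four-fifths" heuristic), not a UK rule.
REVIEW_THRESHOLD = 0.8


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of positive outcomes for each group."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Return the lowest group rate divided by the highest group rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so no disparity
    return min(rates.values()) / highest


# Hypothetical audit log of (group, was_selected) pairs.
audit_log = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A single ratio cannot establish fairness on its own. In practice an audit would pair a check like this with a review of the data, the decision context, and the legal tests that apply in the relevant sector.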

As AI evolves, businesses must stay adaptable and committed to responsible innovation. This approach ensures the safe and ethical use of AI technologies.

Source Links

1. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work

2. https://www.ibm.com/thought-leadership/institute-business-value/en-us/report/ai-governance

3. https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach

4. https://www.ukcybersecuritycouncil.org.uk/thought-leadership/papers/ethics-in-artificial-intelligence/

5. https://aisel.aisnet.org/cais/

6. https://www.stress.org/workplace-stress/

7. https://airc.nist.gov/docs/NIST.AI.100-5.Global-Plan.ipd.pdf

8. https://www.iais.org/uploads/2024/11/Draft-Application-Paper-on-the-supervision-of-artificial-intelligence.pdf

9. https://securiti.ai/ai-regulations-around-the-world/

10. https://www.bdo.co.uk/en-gb/insights/industries/financial-services/artificial-intelligence-opportunity-risk-and-regulation-in-financial-services

11. https://www.ropesgray.com/en/insights/alerts/2024/05/guidance-for-implementing-responsible-ai-in-legal-and-business-practice

12. https://www.safe.ai/ai-risk

13. https://www.protex.ai/guides/the-complete-guide-to-ai-safety-in-the-workplace